Fixing AWS Step Functions errors caused by references to inactive AWS Batch Job Definition revisions

Problem Statement

Here at Tenchi Security, we use AWS Batch in conjunction with AWS Step Functions to execute security tests at scale in our product, Zanshin. This is part of our strategy to have a serverless stack, as discussed in this previous article.

AWS Batch allows you to run large-scale processing tasks without having to provision, manage, or manually scale any servers. AWS Step Functions, on the other hand, offers a way to orchestrate microservices, serverless applications, and container workflows visually and easily.

Like many companies, we use AWS Batch to run container-based tasks from Step Functions at scale. In practice, however, a problem arises when these services are used together in a dynamic environment like ours, where many executions run at once, code changes frequently, and everything is managed with CloudFormation. Non-obvious failures occur when a new version of a Job Definition is deployed to AWS Batch while a Step Function execution that uses it is still running.

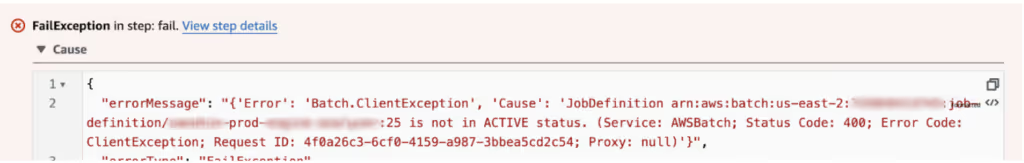

Specifically, if a Step Function execution starts moments before a new Job Definition is deployed, its Batch task can fail with the following error message:

This happens because, when CloudFormation updates a Job Definition, it not only creates a new revision but also marks all previous revisions as inactive. In-flight executions that still reference a previous revision then fail, as in the example above.
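To make the failure mode concrete, here is a toy, in-memory Python model of the behavior just described. It is not the AWS Batch or CloudFormation API: `ToyBatchRegistry`, `cfn_update`, and `submit_job` are hypothetical names, and the raised error text is made up rather than the real Batch message.

```python
from dataclasses import dataclass, field


@dataclass
class ToyBatchRegistry:
    """Hypothetical model of Job Definition revisions under CloudFormation updates."""

    # name -> revision statuses; index 0 holds revision 1
    revisions: dict[str, list[str]] = field(default_factory=dict)

    def cfn_update(self, name: str) -> str:
        """Register a new revision and mark every previous revision INACTIVE."""
        statuses = self.revisions.setdefault(name, [])
        statuses[:] = ["INACTIVE"] * len(statuses)
        statuses.append("ACTIVE")
        return f"{name}:{len(statuses)}"

    def submit_job(self, ref: str) -> str:
        """Submit against name:revision; fails if that revision is not ACTIVE."""
        name, revision = ref.rsplit(":", 1)
        status = self.revisions[name][int(revision) - 1]
        if status != "ACTIVE":
            # Toy error only; the real AWS Batch message differs.
            raise RuntimeError(f"{ref} is {status}")
        return f"submitted {ref}"
```

An execution that pinned `my-jobdefinition:1` before a deploy keeps that reference, so once `cfn_update` produces `:2`, submitting `:1` raises, which mirrors the in-flight failures above.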

This is a CloudFormation “issue” that has been known for years. Keep in mind it also impacts any other systems or frameworks that rely on or are built on top of CloudFormation, such as Serverless Framework or the CDK.

In our case, this caused long-running security scans to fail, and they had to be re-executed with an updated Step Function definition referencing the new Job Definition revision. The impact was twofold: customers had to wait longer for their results, and costs increased due to duplicated scan step executions.

Workaround

At the time of writing, AWS offers no fix for the root cause of this issue. Ideally, CloudFormation would have an option to keep existing revisions active when a new one is created. In a recent contact, AWS Support mentioned that there is an issue for this on the CloudFormation roadmap, but could not provide a target date for its implementation. Worryingly, the issue has not yet been assigned to anyone or to any project in the roadmap GitHub repository.

The workaround is to stop relying on new revisions and instead create an entirely new Job Definition on each change, keeping the previous ones for as long as they are needed.

To ensure that each new Job Definition is unique and does not conflict with previous versions, we can append to its name a timestamp or anything else that changes with each release (container image tag, application version, etc.). This allows conflict-free creation and makes version management in the environment easier.
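A minimal sketch of the naming scheme, assuming a timestamp suffix in the same format as the example names used later in this post (for example, `my-jobdefinition-dev-20240313-081021`). The function names and the `stage` parameter are hypothetical; the validation follows the documented AWS Batch rule that job definition names are up to 128 letters, numbers, hyphens, and underscores.

```python
import re
from datetime import datetime, timezone

# AWS Batch job definition names: up to 128 letters, numbers, hyphens, underscores.
_VALID_NAME = re.compile(r"[A-Za-z0-9_-]{1,128}")


def timestamp_suffix(moment: datetime) -> str:
    """Format an aware datetime as a UTC suffix such as 20240313-081021."""
    return moment.astimezone(timezone.utc).strftime("%Y%m%d-%H%M%S")


def job_definition_name(prefix: str, stage: str, suffix: str) -> str:
    """Join prefix, stage and a per-change suffix into a unique name."""
    name = f"{prefix}-{stage}-{suffix}"
    if not _VALID_NAME.fullmatch(name):
        raise ValueError(f"invalid AWS Batch job definition name: {name!r}")
    return name
```

Calling `job_definition_name("my-jobdefinition", "dev", timestamp_suffix(datetime.now(timezone.utc)))` at deploy time yields a fresh name on every run, assuming no two deploys of the same stage happen within the same second.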

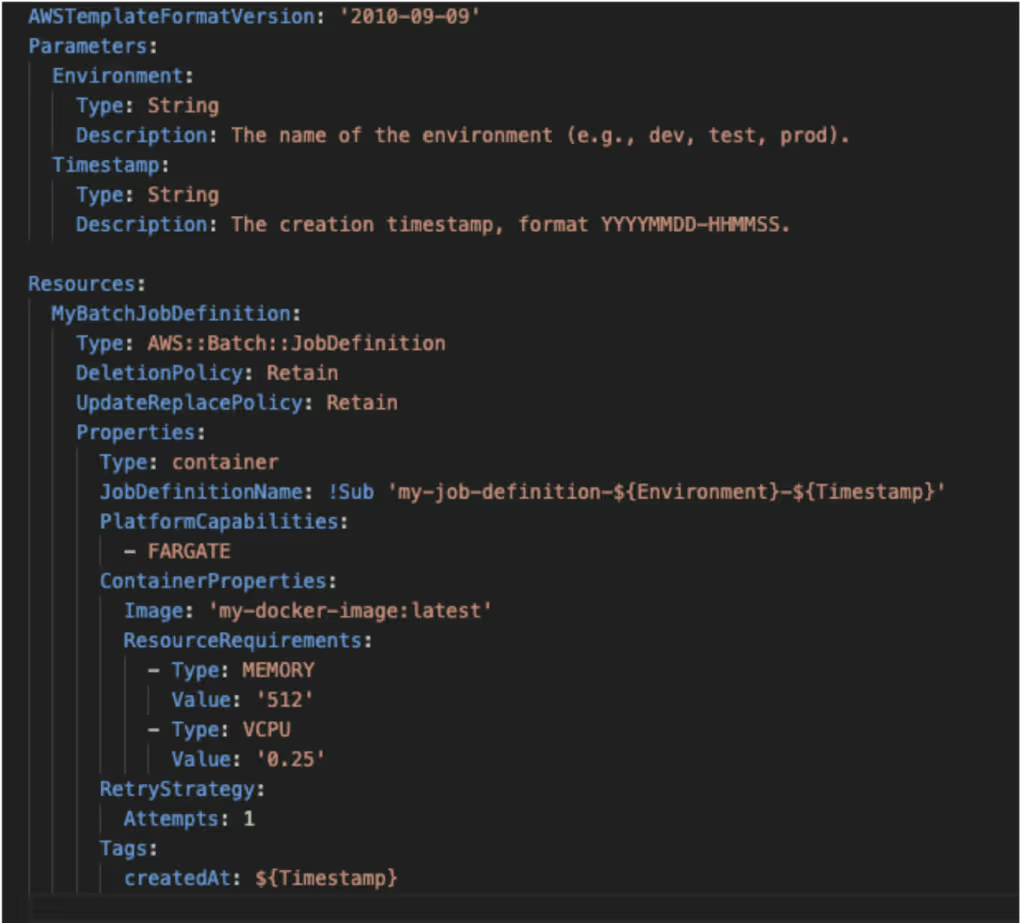

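To do that, we apply the DeletionPolicy: Retain and UpdateReplacePolicy: Retain attributes to the Job Definition. Changing the Job Definition name makes CloudFormation replace the resource during a stack update; UpdateReplacePolicy: Retain keeps the previous Job Definition when that replacement happens, and DeletionPolicy: Retain keeps it if the resource is deleted, for example when the stack is deleted. The new Job Definition is created under its new name alongside the old one.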
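Because forgetting either attribute silently brings the problem back, a pipeline could check the rendered template before deploying. A minimal sketch, assuming the template has already been loaded into a Python dict (for example from JSON; a YAML template with short-form tags such as `!Sub` would need a CloudFormation-aware loader). The function name is hypothetical.

```python
def missing_retain_policies(template: dict) -> list[str]:
    """Return logical IDs of Batch Job Definitions lacking either Retain policy."""
    offenders = []
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::Batch::JobDefinition":
            continue  # only Job Definitions are affected by this issue
        if (
            resource.get("DeletionPolicy") != "Retain"
            or resource.get("UpdateReplacePolicy") != "Retain"
        ):
            offenders.append(logical_id)
    return offenders
```

A non-empty result could be used to fail the build before the stack update runs.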

In our scenario, we opted to use the Docker image tag as the suffix. This ensures that a new Job Definition is created only when the container image version changes; if the image version stays the same, the Job Definition name does not change.
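A sketch of deriving the suffix from the image reference, assuming images are referenced by an explicit tag. Because job definition names cannot contain characters such as `.`, which are common in tags like `v1.4.2`, the sketch replaces them with `-`. The function name and the example image URIs are hypothetical.

```python
import re

# Characters not allowed in AWS Batch job definition names.
_INVALID_CHARS = re.compile(r"[^A-Za-z0-9_-]")


def suffix_from_image(image: str) -> str:
    """Derive a Job Definition name suffix from a container image reference.

    The tag is taken from the last path segment, so registry ports such as
    host:5000/repo:tag are handled, and any @sha256 digest is ignored.
    """
    last_segment = image.rsplit("/", 1)[-1].split("@", 1)[0]
    if ":" not in last_segment:
        raise ValueError(f"image has no explicit tag: {image!r}")
    tag = last_segment.rsplit(":", 1)[1]
    return _INVALID_CHARS.sub("-", tag)
```

One caveat of this sanitization: distinct tags such as `v1.4` and `v1-4` map to the same suffix, so a tagging convention that avoids such pairs is assumed.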

On its own, however, this approach creates a problem: old Job Definitions would never be deleted. This leads to unnecessary clutter, and could even cause an outage if AWS were to enforce a limit on the number of Job Definitions per account or region.

Since the Job Definition resource has no field holding its creation timestamp, we add a resource tag containing the “Created” field from the docker inspect output for the container image. We can then use this tag to delete any Job Definitions older than a set number of days.
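The `Created` value reported by `docker inspect` (for example via `docker inspect --format '{{.Created}}' <image>`) is an RFC 3339 timestamp with nanosecond precision, which Python's `datetime` cannot represent directly. A minimal parsing sketch, usable both when writing the tag and when reading it back during cleanup; the function name is hypothetical, and the fraction is truncated to microseconds.

```python
import re
from datetime import datetime, timedelta, timezone

_RFC3339 = re.compile(
    r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?(Z|[+-]\d{2}:\d{2})$"
)


def parse_docker_created(value: str) -> datetime:
    """Parse a docker inspect "Created" timestamp into an aware UTC datetime."""
    match = _RFC3339.match(value.strip())
    if not match:
        raise ValueError(f"unrecognized timestamp: {value!r}")
    base, fraction, offset = match.groups()
    parsed = datetime.strptime(base, "%Y-%m-%dT%H:%M:%S")
    # Keep at most 6 fractional digits (microseconds), padding shorter ones.
    micros = int((fraction or "0")[:6].ljust(6, "0"))
    if offset == "Z":
        tz = timezone.utc
    else:
        sign = 1 if offset[0] == "+" else -1
        tz = timezone(sign * timedelta(hours=int(offset[1:3]), minutes=int(offset[4:6])))
    return parsed.replace(microsecond=micros, tzinfo=tz).astimezone(timezone.utc)
```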

Example of a CloudFormation Template for Job Definition

Below is a simplified example of a CloudFormation template that illustrates the creation of a Job Definition with the mentioned workaround. For simplicity’s sake, we are appending a timestamp to the Job Definition name.
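The original template is not reproduced here, so the following is only a sketch under stated assumptions: it builds an equivalent CloudFormation template as a Python dict and prints it as JSON. The parameter names (`Stage`, `DeployTimestamp`, `ImageUri`, `ImageCreated`), the `CreatedAt` tag key, and the resource sizes are hypothetical; the pipeline is assumed to pass a new `DeployTimestamp` on every deploy.

```python
import json


def job_definition_template() -> dict:
    """Return a minimal CloudFormation template (as a dict) for the workaround."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            "Stage": {"Type": "String", "Default": "dev"},
            # Hypothetical: a fresh value per deploy, e.g. 20240313-081021.
            "DeployTimestamp": {"Type": "String"},
            "ImageUri": {"Type": "String"},
            # Hypothetical: the image's docker inspect "Created" value.
            "ImageCreated": {"Type": "String"},
        },
        "Resources": {
            "JobDefinition": {
                "Type": "AWS::Batch::JobDefinition",
                # Keep the old Job Definition when the new name forces a
                # replacement, and when the resource or stack is deleted.
                "DeletionPolicy": "Retain",
                "UpdateReplacePolicy": "Retain",
                "Properties": {
                    "JobDefinitionName": {
                        "Fn::Sub": "my-jobdefinition-${Stage}-${DeployTimestamp}"
                    },
                    "Type": "container",
                    "ContainerProperties": {
                        "Image": {"Ref": "ImageUri"},
                        "ResourceRequirements": [
                            {"Type": "VCPU", "Value": "1"},
                            {"Type": "MEMORY", "Value": "2048"},
                        ],
                    },
                    "Tags": {"CreatedAt": {"Ref": "ImageCreated"}},
                },
            }
        },
        "Outputs": {
            # Ref returns the Job Definition ARN, including its revision.
            "JobDefinitionArn": {"Value": {"Ref": "JobDefinition"}}
        },
    }


if __name__ == "__main__":
    print(json.dumps(job_definition_template(), indent=2))
```

Deploying the printed JSON with a new `DeployTimestamp` each time would create a Job Definition with a new name per deploy, while the Retain attributes keep the previous one registered.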

This approach ensures that each deployment creates a new Job Definition instance, keeping the environment stable and avoiding execution errors related to the revision statuses.

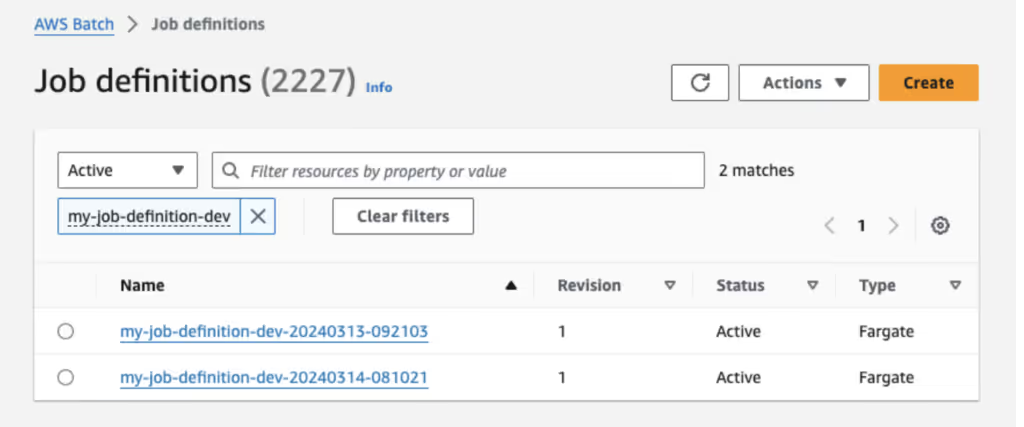

As a result, in our example, we’ll have two active Job Definitions with the same name prefix, but with different timestamps. Each is associated with a different container image version.

The image below shows the State Machine using revision 1 of Job Definition “my-jobdefinition-dev-20240313-081021”.

After updating the stack, the same State Machine is now using revision 1 of Job Definition “my-jobdefinition-dev-20240314-081021”.

Note that the revision number is always :1. Because every change creates a brand-new Job Definition instead of a new revision, running executions never lose their reference to a deactivated revision.
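For reference, a Step Functions task that runs a Batch job typically points at the Job Definition ARN, which ends in the revision number. A minimal sketch of such an Amazon States Language Task state using the `arn:aws:states:::batch:submitJob.sync` integration; the region, account ID, queue ARN, job name, and function names are hypothetical placeholders.

```python
def job_definition_arn(region: str, account_id: str, name: str, revision: int = 1) -> str:
    """Build a Batch Job Definition ARN; with this workaround the revision stays 1."""
    return f"arn:aws:batch:{region}:{account_id}:job-definition/{name}:{revision}"


def submit_job_state(definition_arn: str, queue_arn: str, next_state: str) -> dict:
    """Amazon States Language Task state that submits a Batch job and waits for it."""
    return {
        "Type": "Task",
        "Resource": "arn:aws:states:::batch:submitJob.sync",
        "Parameters": {
            "JobName": "security-scan",  # hypothetical job name
            "JobDefinition": definition_arn,
            "JobQueue": queue_arn,
        },
        "Next": next_state,
    }
```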

To clean up old Job Definitions, the post-build phase of our pipeline runs a dedicated cleanup script. It lists the active Job Definitions belonging to the service being deployed and reads their timestamp tag. It always keeps the two most recent Job Definitions, plus any others created in the last 24 hours (our jobs never run longer than that), and deregisters all older ones.
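A minimal sketch of that retention rule, not our actual script: keep the two newest Job Definitions for a service, keep anything created within the last 24 hours, and deregister the rest. The selection logic is a pure function so it can be tested offline; the boto3 helpers use `describe_job_definitions` and `deregister_job_definition` and assume the creation timestamp lives in a tag whose key and parser you pass in. `JobDef` and the helper names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable


@dataclass(frozen=True)
class JobDef:
    arn: str
    name: str
    created: datetime  # aware datetime parsed from the timestamp tag


def select_for_deregistration(
    defs: list[JobDef],
    prefix: str,
    now: datetime,
    keep_latest: int = 2,
    grace: timedelta = timedelta(hours=24),
) -> list[str]:
    """Return ARNs to deregister: everything matching `prefix` except the
    `keep_latest` newest and anything created within `grace` of `now`."""
    ours = sorted(
        (d for d in defs if d.name.startswith(prefix)),
        key=lambda d: d.created,
        reverse=True,  # newest first
    )
    return [d.arn for d in ours[keep_latest:] if now - d.created > grace]


def fetch_active(tag_key: str, parse_created: Callable[[str], datetime]) -> list[JobDef]:
    """List ACTIVE job definitions that carry the timestamp tag (needs boto3)."""
    import boto3  # imported lazily so the selection logic runs without it

    batch = boto3.client("batch")
    found = []
    for page in batch.get_paginator("describe_job_definitions").paginate(status="ACTIVE"):
        for jd in page["jobDefinitions"]:
            raw = jd.get("tags", {}).get(tag_key)
            if raw is None:
                continue  # skip definitions without the timestamp tag
            found.append(JobDef(jd["jobDefinitionArn"], jd["jobDefinitionName"], parse_created(raw)))
    return found


def deregister(arns: list[str]) -> None:
    """Deregister (mark INACTIVE) each job definition ARN."""
    import boto3

    batch = boto3.client("batch")
    for arn in arns:
        batch.deregister_job_definition(jobDefinition=arn)
```

Matching by name prefix assumes no other service's Job Definition names start with the same prefix; a stricter pattern (the prefix followed by the timestamp format) would be safer.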

This workaround mitigates Step Functions errors when new versions of a Job Definition are deployed. While AWS does not offer an official solution for this case, it seems to be the best available workaround. Keep in mind that the environment must be cleaned up periodically to actually deactivate unused Job Definitions.

Credits

Luís F. Pontes