Reviewing Tenchi’s Tech Stack four years later

At Tenchi, we made a measured and deliberate decision when we first chose the tech stack for our product Zanshin. Back in 2019 when we first started, we only had an initial idea of what our product would be. It was not possible to anticipate its enormous success and how the market would evolve and what customers would demand from the product. However, we started with a pretty good foundation:

- Simplicity and no fuss. We knew we did not want to spend an epsilon of time configuring, fine-tuning and hardening vanilla, off-the-shelf servers.

- Scalability. No one builds a startup thinking the product will not sell. But still, many startups fall into the trap that we can “buy more capacity later”. This is only true if you design your system for scalability. We planned that from the start.

- Ignore vendor lock-in. This may seem counterintuitive. Not being locked-in to a single cloud provider, say GCP, Azure or AWS is in general a good thing. However, pretty much everybody knows this is a lot harder and time-consuming to achieve than it seems on the surface. And spending effort in building cloud-agnostic tools and infrastructure is a mistake for almost any startup and is certainly not something to worry about in the first stages.

- Security by design. As a security company, it is incumbent upon us to be even more secure than the most secure companies out there.

With these principles in mind, we chose our tech stack. And now, four years into it, it is time to look back and decide whether this tech stack was the correct choice and if not, what could have been better and how we plan to move forward.

The main decision we made was to use a serverless architecture and specifically the Serverless Framework, using AWS. This brought us the first three points above almost instantaneously. The fourth one, security, was certainly made simpler than if we had chosen to build our tech stack on more traditional virtual server architectures.

Unlike traditional server architecture, where developers must manage and provision servers, a serverless architecture offloads this responsibility to the cloud provider. This shift in focus allows developers to concentrate on writing code and building application logic without being bogged down by infrastructure management tasks.

To recap, this is what a serverless architecture offers in terms of pros and cons.

Pros of Serverless Architectures:

- Cost-Effective: Serverless architecture offers a pay-per-use model, meaning one only pays for the resources consumed. This eliminates the need to invest in upfront hardware costs or pay for spare server capacity, leading to significant cost savings and easing capacity planning.

- Scalability: Serverless applications scale automatically based on demand. As traffic increases, the cloud provider dynamically allocates additional resources to handle the surge, ensuring seamless performance and availability.

- Ease of Development: Engineering teams can focus on writing code and business logic without getting entangled in server provisioning and maintenance. This streamlined approach accelerates development cycles and enhances productivity.

- High Availability: Serverless architecture is inherently resilient due to its distributed nature. If a particular server instance fails, other instances seamlessly take over, ensuring uninterrupted application operation. Moreover, it is trivial to take advantage of multiple AWS availability zones so one’s application can even survive entire data center failures with ease.

- Security. In security, one fundamental task is keeping servers and networking infrastructure adequately sized, up-to-date and patched to the newest security standards. By offloading these basic tasks to the cloud provider and making the most out of the shared responsibility model, we avoid wasting engineering effort into undifferentiated, time-consuming and error-prone activities, making our lives simpler in the process.

Cons of Serverless Architectures:

- Cold Starts: When a serverless function has not been executed recently, it may experience a delay or “cold start” as the cloud provider spins up a new execution environment. This can impact performance for functions that are infrequently invoked. There are ways to mitigate this, for example by reserving some capacity to be always available. Unfortunately, this eats into the benefits of having a true serverless architecture (i.e. one might end paying for idle resources).

- Cost Unpredictability: While serverless architecture offers cost-effectiveness in general, applications with unpredictable usage patterns may incur unexpected charges. And such applications can be victims of Denial of Wallet attacks that end up costing a small fortune. This is a consequence of the automatic scaling that serverless uses by default, and limitations have to be designed in to ensure costs do not get out of control in an incident. Such limitations are not necessarily simple or straightforward.

- Debugging Complexity: Troubleshooting serverless applications can be more intricate due to the distributed and dynamic nature of the underlying infrastructure. There are ways to mitigate this as well. In general, this is not so much a con of the framework, but a con of creating microservices and using asynchronous processing based on task queues.

Here is how Tenchi deals with some of the cons.

Regarding cold starts, we set up our lambdas (the micro functions that serve our API requests) to have a fixed minimum availability. While this sets a floor to our cost, we maintain instant availability and keep the scalability on the way up.

For costs, we have internal controls (and are actively building more) to let us know where our expenditures are going. By focusing on the big-ticket items one by one we keep costs in check. We also have set up rate limits and request size limits to mitigate denial of wallet attacks, minimizing chances that we run into huge, unpredictable costs. More will be done by setting alarms that detect anomalies in traffic so that we can proactively investigate if they are legitimate or part of malicious attacks.

To minimize debugging complexity, we have a three-pronged approach: design it properly, document it, keep it simple. While some of our API operations require the use of asynchronous events to be processed in the background (because they are too long to handle during a short-lived web request), we keep them to a minimum, place them in well-documented places in the code, and continuously keep a lid on additional complexity by having senior engineers code review all changes that might increase complexity.

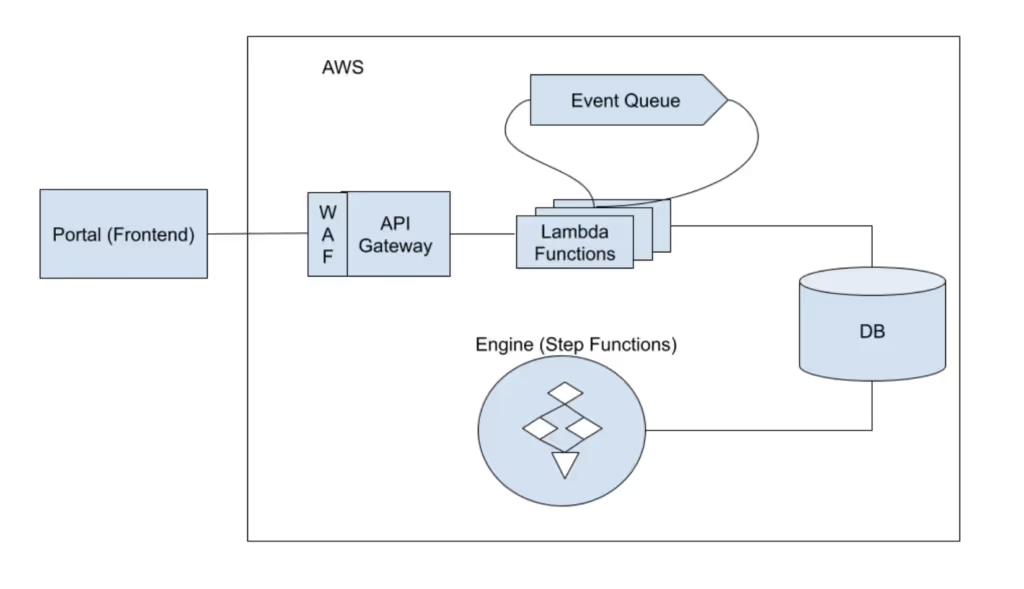

With that, here is a very simplified overview of our architecture and tech stack:

The frontend (our portal) is written in the Angular framework, all in TypeScript.

Our API is also written in TypeScript on top of AWS WAF, Amazon API Gateway and Lambda functions, interfacing with AWS systems like EventBridge.

Finally, our engine is responsible for over four thousand security scans that Zanshin executes daily on cloud environments and external attack surfaces of the companies on our platform. It runs on top of AWS Step Functions and is mostly written in Python.

So, there you have it, our full tech stack:

- Frontend: Angular, in TypeScript.

- API: API Gateway, Lambdas, EventBridge, in TypeScript.

- Engine: Step Functions (Lambdas and Batch jobs) in Python.

- Databases: DynamoDB and Aurora (Postgres).

Four years later, how did these choices turn out? Well, the choice of TypeScript for the frontend and API still makes a lot of sense. For one, the two teams work together and it is a lot simpler to context switch between API and Frontend when one is using the same language and tools.

Regarding Angular in particular, that is a choice that worked well for us. At the same time, Angular seems to be losing its appeal and popularity. It is a complex and very opinionated framework, which means the pool of developers out there that are comfortable in this environment is shrinking. It is still too soon to dismiss Angular and it has served us well so far, but we are also acutely aware that if we were to start over today, we probably would not use Angular anymore.

As for the Engine, we chose Step Functions due to its ease of setting up a flow system with stages, checks and balances and retries. We use Python because most security tools out there and the SDKs for the APIs we interact with are written in Python. And for that reason, most security experts, including the ones who currently work at Tenchi, are well-versed in Python. So, the language choice was a good one. However, it creates a situation where sharing code between the API and the Engine is tricky. To mitigate that, we have an internal API that makes the coupling between API and Engine independent.

If we were starting from scratch again, we likely would stick with one language for the entire stack, for ease of context switching and sharing code. It would be tempting to choose TypeScript again so that the frontend team and the API team could help each other efficiently like they do now while allowing the engine and the API to share business logic as libraries.

However, thinking this through some more, no code is shared between the frontend and the API, so using the same language is not a strong requirement. It is a specially muted point when one considers that most of our engineers can switch quite easily between a half-dozen languages.

The engine and the API, on the other hand, could benefit from using the same language, so that they could share common libraries, even though they run in slightly different environments. It would be tempting to choose Python for both or TypeScript for both. However, I would make the case that Node (the interpreter behind TypeScript) has such a high starting cost and big memory footprint that choosing something more efficient like Go would make more sense. We would save money on the Cold Start problem mentioned above. It would also make our lambdas “thinner” in the memory footprint department according to a recent study done by one of our engineers.

And for the security tools, we can always use Python in specific steps inside the Step Functions should a need arise. Furthermore, I expect that our security engineers will be writing less code going forward as we grow our software engineering talent.

Overall, most decisions four years ago were good ones and still stand today. Choosing a serverless architecture was definitely the most important decision we made. And even the smaller decisions that we would have changed today, like the choice of programming language for the various pieces and the frontend framework, are definitely not fatal and if anything are only minor drawbacks we need to deal with.

If you liked this post, make sure to come back for more. In a future post, I will talk about the Engineering Culture at Tenchi.

– Eduardo Pinheiro, Director of Software Engineering.